Bridging the Gap: Why Clinician Trust is Key to Implementing AI in Mental Health

By Oliver Higgins

Artificial intelligence (AI) and machine learning (ML) are rapidly emerging fields promising innovative solutions across healthcare. Our recent integrative review, published in the International Journal of Mental Health Nursing, investigated the current evidence base for incorporating AI/ML-based Decision Support Systems (DSS) into mental health care settings.

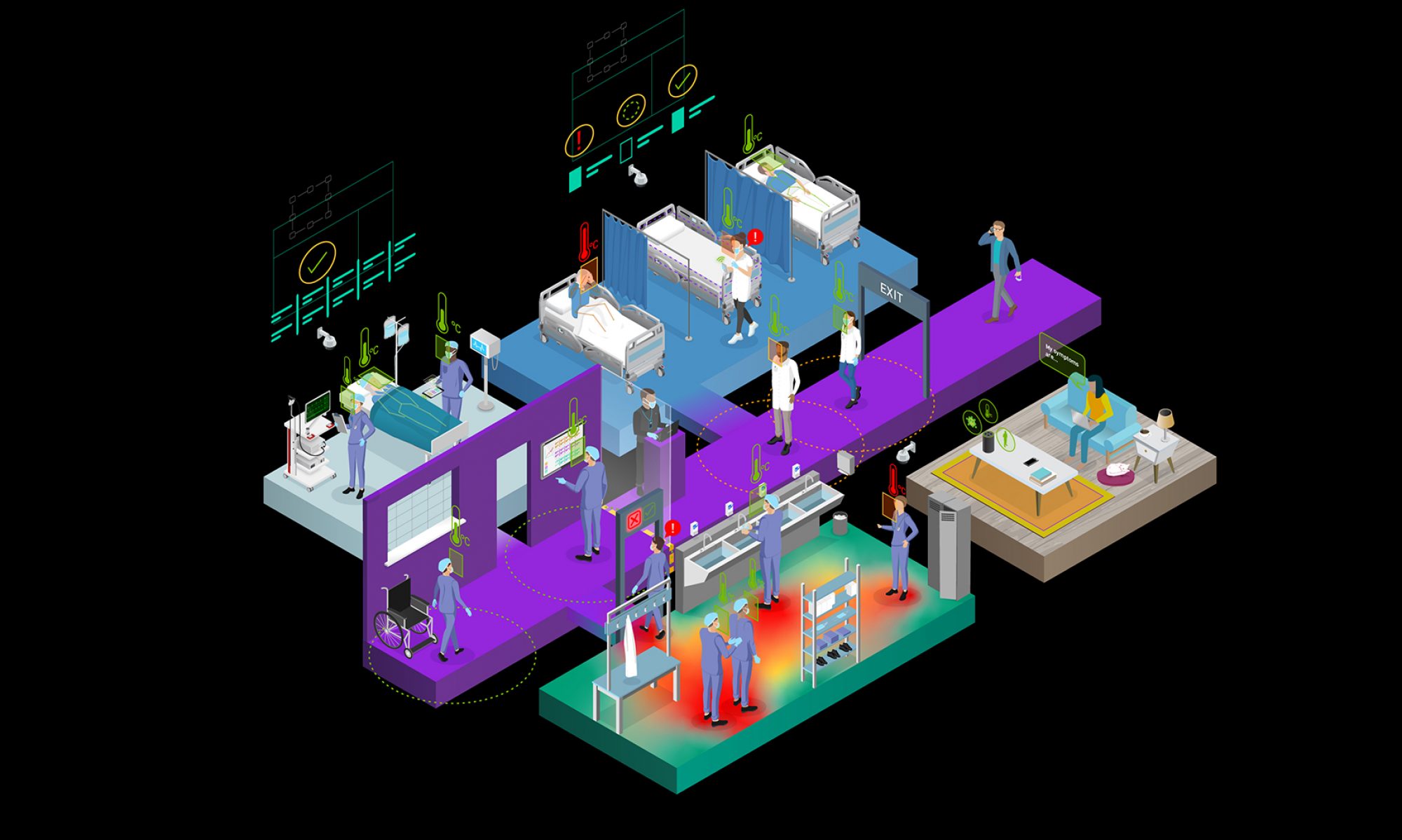

While AI/ML DSS offer significant potential to alleviate systemic problems like clinician burnout, resource burden, and the resulting phenomenon of unfinished or missed care, particularly in acute care settings, our findings indicate that the successful integration of these technologies hinges on a single, crucial factor: trust and confidence.

The Urgent Need for Support

The escalating demand on health care resources, evidenced by increased emergency presentations and longer patient stays in Australian public hospitals prior to 2019–20, has led to clinician burnout, frustration, and reduced productivity. This resource burden forces clinicians to prioritise, which often leaves patients’ educational, emotional, and psychological needs unmet, a form of missed care.

AI/ML-powered DSS represent a possible partial solution: they give clinicians tools that accelerate decision-making and flag concerns, such as potential suicidal behaviours, from existing clinical notes. However, research in this area remains limited; our review identified only four studies published between 2016 and 2021 that met the inclusion criteria.

The Barrier of the ‘Black Box’

The primary theme identified as a significant barrier to the adoption of AI/ML DSS is the uncertainty surrounding clinicians’ trust and confidence, end-user acceptance, and system transparency.

Many advanced AI implementations are inherently complex, employing learning algorithms that can obscure the rationale behind a recommendation. This results in what is known as a ‘black-box AI,’ leading to reluctance and anxiety among clinicians who cannot understand the logic or process used by the system to devise a recommendation. Clinicians require an understanding of the data and features used to make predictions, mirroring the standards of clinical documentation.

Several studies highlighted that clinicians need systems that offer transparent and interpretable results. Meaningful explanations about recommendations are required to enhance levels of trust and confidence in AI/ML DSS.

- Lutz et al. (2021) found that presenting recommendations via boxplots, rather than clear binary outputs with supporting evidence, made conclusions difficult for clinicians to interpret and communicate.

- The inability of a system to communicate its underlying mechanism or process contributes directly to clinician mistrust.

To overcome the black-box challenge, systems should use glass-box design approaches, supported by toolkits such as InterpretML, that produce interpretable models by revealing what the machine has ‘learnt’ from the data. Any AI/ML system intended for clinical use must be intelligible, interpretable, transparent, and clinically validated, providing a clear explanation of how clinical features contributed to the recommendation.
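To make the glass-box idea concrete, here is a minimal sketch of an additive model whose output can be broken down feature by feature. It is not InterpretML and not a system from any of the reviewed studies. The feature names, weights, and intercept are invented for illustration and have no clinical validity.

```python
import math

# Hypothetical, hand-picked weights for illustration only; not clinically validated.
WEIGHTS = {
    "prior_admissions": 0.40,
    "missed_appointments": 0.25,
    "sleep_hours": -0.15,
}
INTERCEPT = -1.0


def explain(features):
    """Return the predicted probability and each feature's additive contribution."""
    # Each contribution is simply weight * value, so it can be shown to the clinician.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    logit = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    return probability, contributions


# Invented example input, not real patient data.
probability, contributions = explain(
    {"prior_admissions": 2, "missed_appointments": 3, "sleep_hours": 5}
)
print(f"flag probability: {probability:.2f}")
# List the features from largest to smallest influence on the score.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>20}: {value:+.2f}")
```

Because the score is a plain sum, a clinician can see which features pushed the recommendation up or down and by how much. That is the kind of explanation a black-box model cannot offer directly.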

Collaborative Development and Clinician Autonomy

A crucial finding is the necessity of involving clinicians in all stages of research, development, and implementation of artificial intelligence in care delivery.

When clinicians are involved in the iterative design cycle, the resulting tool is more likely to foster trust and confidence. For instance, Benrimoh et al. (2020) reported positive findings linked to clinician involvement in the DSS design, noting that the system preserved clinician autonomy by allowing clinicians to select treatments or actions beyond the DSS recommendations when clinically appropriate. This retention of clinical autonomy is essential, as the final clinical decision must always remain in the hands of the clinician.
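One way to picture this advisory role is a decision record in which the clinician's choice always takes precedence over the recommendation. The sketch below is a hypothetical illustration, not the design used by Benrimoh et al. The `Decision` class, the `decide` function, and the field names are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    recommended: str                       # what the DSS suggested
    chosen: str                            # what the clinician decided
    override_reason: Optional[str] = None  # recorded when the two differ

    @property
    def overridden(self) -> bool:
        return self.chosen != self.recommended


def decide(recommended: str, clinician_choice: Optional[str] = None,
           reason: Optional[str] = None) -> Decision:
    """The clinician's choice always wins; the recommendation is advisory."""
    if clinician_choice is None or clinician_choice == recommended:
        return Decision(recommended, recommended)
    return Decision(recommended, clinician_choice, reason)


# Hypothetical usage: the clinician selects an option the DSS did not recommend.
record = decide("option A", "option B", reason="patient preference")
print(record.chosen, record.overridden)
```

Recording the reason for an override keeps the decision auditable without ever letting the system block the clinician's judgement.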

Earning the trust and confidence of clinical staff must be the foremost consideration when implementing any AI-based decision support system. Clinicians should be encouraged to contribute actively to the development of new digital tools that help identify missed care.

Looking Ahead: End-User Acceptance and Ethical Implications

While limited, the literature does suggest positive benefits for patients. Patients reported feeling more engaged in shared decision-making when clinicians shared the DSS interface with them. The introduction of the tool was also reported to improve the patient-clinician relationship significantly.

However, the attitudes towards and acceptance of AI/ML among service users (those with lived experience of mental illness) are still poorly understood, and their involvement in research design is severely limited. Future research must focus on understanding how the use of AI/ML affects these populations and must address potential risks such as techno-solutionism, overmedicalisation, and discrimination.

In conclusion, AI/ML DSS in mental health settings show the most promise when the trust and confidence of clinicians have been earned. Successful integration requires systems that are transparent, interpretable, and developed through strong collaboration with both clinicians and end-users, ensuring that an algorithm’s performance translates into improved patient outcomes and safe, ethical practice.

Higgins, O., Short, B. L., Chalup, S. K. and Wilson, R. L. (2023). Artificial intelligence (AI) and machine learning (ML) based decision support systems in mental health: An integrative review. International Journal of Mental Health Nursing.